When I start to use LSTM model, the first problem I faced was the model input format. Specifically, the input format is (# of samples, # of time steps, # of features). It looks not difficult but there are many tricky things inside. So this post is going to detailedly help understand LSTM model structure especially the input and output.

1. LSTM Structure

First and foremost, I think it is necessary to recap the structure of LSTM model. Here is my previous post link: RNN LSTM and GRU. After reading, you will realize that this article as well as lots of other LSTM related blogs focus mostly on the explanation of single LSTM unit (that is the core for sure) but hard to find the ones grabbing more general structure. So this time I want to emphasize on the overall structure of LSTM.

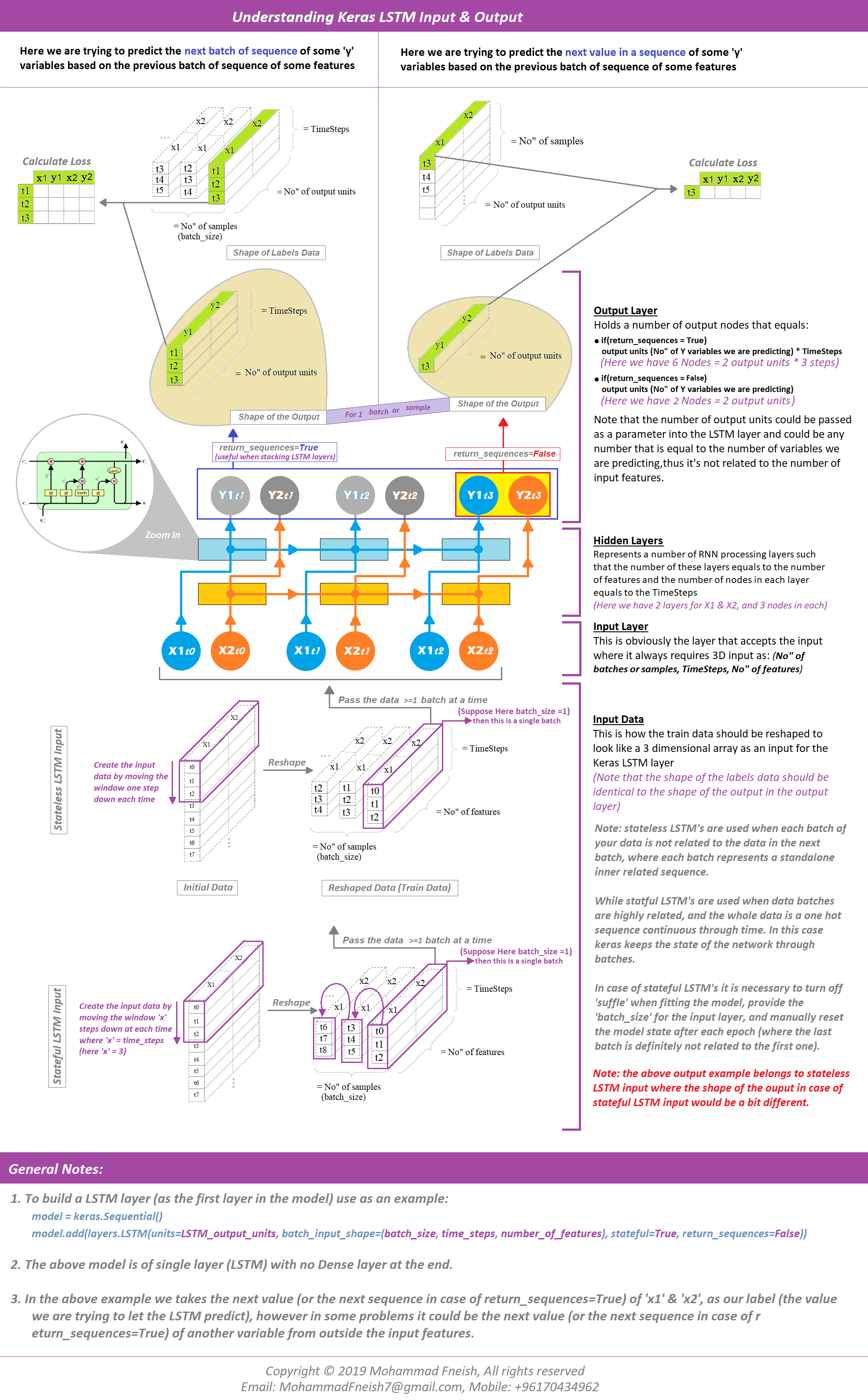

Without further ado, let's directly look at one really amazing diagram I found from Mohammad Fneish's github:

Let's look at the chart from bottom to the top and also summarized some bullet points:

Input Data

- For this specific input data, each sample has size \((3, 2)\) where 3 means 3 time steps and 2 means two features. Each sample will give a 2D array

- We have two type of hidden states and cell states initialization based on the characteristic of the batches. In stateless cases, LSTM updates parameters on batch1 and then re-initiate hidden states and cell states (usually all zeros) for batch2; while in stateful cases, it uses batch1's last output hidden states and cell sates as initial states for batch2. Therefore:

- Stateful LSTM is used when two sequences in two batches have connections (e.g. prices of one stock). And we need to specify the batch size (to make sure the state size is the same for each batch) at the same time make

shuffle = False. - Stateless LSTM used when the sequence in two batches has no closed relations (e.g. one sequence represents a complete sentence)

- Stateful LSTM is used when two sequences in two batches have connections (e.g. prices of one stock). And we need to specify the batch size (to make sure the state size is the same for each batch) at the same time make

Hidden Layer

- The number of LSTM node in the layer equals to the number of time steps

- Here I think despite of what this graph has showed, we can also stack the feature space in each time step, meaning combine \(X_{1,t_0}, X_{2,t_0}\) from size \((n, 1)\) to (# of sample in a batch, # of features), in that case we will only have one feature layer

Output Data

- Output data shape is decided by user when initialize the hidden state and cell state. It is easier to think each time step as a fully connected layer with 1 inputs and 32 outputs but with a different computation than FC layers.

- If you are about stacking multiple LSTM layers, use

return_sequences=Trueparameter, so the layer will output the whole predicted sequence rather than just the last value. In another word: make it true if you want to predict next sequence of labels for a batch, else make it false if you want to predict only next value of a batch

If you still feel little bit confused about each parameter's size, there is one really good explanation here: In LSTM, how do you figure out what size the weights are supposed to be?

2. LSTM With Time Step

After previous discussion I think we are pretty much confident with the LSTM's input and output. We can easily find out that the main difference between LSTM model input and other traditional models is the usage of # of time steps. So let's further look at this.

2.1 LSTM with one time step

For example we have sales data with \(p\) other related features. and in most cases the value in \(t\) time will also be related to values in \(t-1, t-2..\). So here we were thinking to add more features with the window method. Basically we take \(w\) lags into considerations. For example if here we take window length \(w = 3\), then our input data shape will be \((n, 1, p+3)\). And if we use this as input data for LSTM, it will work similar to basic MLP model since here we only have one time step so actually the benefit of using LSTM cannot be fully reflected (remember the power of it is to have long-term memory due to the cell state).

2.3 LSTM with time steps

Time steps provide another way to phrase our time series problem. Like above in the window example, we can take prior time steps in our time series as inputs to predict the output at the next time step. But still we deem each data sample an individual point and has no relationship with others.

So instead of phrasing the past observations as separate input features, we can use them as time steps of the one input feature, which is indeed a more accurate framing of the problem.

For example, if we have panel data (multi-group data across time), we can reshape the input data to: \((n \times m, t, p)\), here we suppose m is the number of groups, n is the row number for each group, t is the time steps for all groups (needs padding some time), and p is the number of features.

Reference:

https://machinelearningmastery.com/time-series-prediction-lstm-recurrent-neural-networks-python-keras/

https://www.quora.com/In-LSTM-how-do-you-figure-out-what-size-the-weights-are-supposed-to-be/answer/Ashutosh-Choudhary-2

https://github.com/MohammadFneish7/Keras_LSTM_Diagram