Object detection combines image classification with localization. We are already fairly familiar with classification, so this post mainly covers object localization.

1. Single Object Localization

First, let's talk about single-object localization, where each region of the image contains at most one object, so no two objects overlap.

1.1 Output format and loss function

Our goal is to predict a bounding box that contains the object we have classified. For the model to learn each object's location, the training labels must include this information as well. Here is an example of the output format, assuming three classes: \[y^{(i)} = [p_c,b_x,b_y,b_h,b_w,c_1,c_2,c_3]\] where \((b_x,b_y)\) are the coordinates of the bounding-box center; \(b_h,b_w\) are the height and width of the bounding box; \(p_c\) is the probability that the bounding box contains an object; and \(c_1,c_2,c_3\) are the probabilities of each class.
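
As a rough illustration of this label format, here is a minimal sketch that builds \(y^{(i)}\) for one image. The helper name `make_label`, the normalization of the box to the range [0, 1], and the choice to store zeros in the unused entries when there is no object are assumptions made for this example, not something the format itself prescribes.

```python
def make_label(box=None, class_index=None, num_classes=3):
    """Build y = [p_c, b_x, b_y, b_h, b_w, c_1, ..., c_K] for one image.

    box is (b_x, b_y, b_h, b_w); here it is assumed to be normalized to [0, 1]
    relative to the image size. Pass box=None for an image with no object.
    """
    if box is None:
        # No object: p_c = 0. The other entries are never used by the loss,
        # so this sketch simply stores zeros there.
        return [0.0] * (5 + num_classes)
    one_hot = [1.0 if k == class_index else 0.0 for k in range(num_classes)]
    return [1.0, *box, *one_hot]


# An object of the second class (index 1), centered at (0.5, 0.7)
y_obj = make_label(box=(0.5, 0.7, 0.3, 0.4), class_index=1)
# A background image with no object
y_bg = make_label()
```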

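In that case, the loss function is: \[(\hat{p}_c-p_c)^2 + \cdots+(\hat{c}_3-c_3)^2 \quad \text{if } p_c=1\] \[(\hat{p}_c-p_c)^2 \quad \text{if } p_c=0\] When \(p_c=0\) there is no object, so the box and class entries carry no information and only the error on \(p_c\) is penalized.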
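
The two cases of this loss can be sketched in a few lines of plain Python. The function name `localization_loss` and the list-based vectors are choices made for this example only; a real training setup would compute the same quantity on tensors.

```python
def localization_loss(y_true, y_pred):
    """Squared-error loss for one example in the format [p_c, b_x, b_y, b_h, b_w, c_1, c_2, c_3]."""
    if y_true[0] == 1:
        # Object present: sum the squared error over every component
        return sum((p - t) ** 2 for p, t in zip(y_pred, y_true))
    # No object: only the objectness term p_c is penalized
    return (y_pred[0] - y_true[0]) ** 2


y_true_obj = [1.0, 0.5, 0.5, 0.25, 0.25, 0.0, 1.0, 0.0]
y_pred_obj = [0.5, 0.5, 0.5, 0.25, 0.25, 0.0, 1.0, 0.0]
loss_obj = localization_loss(y_true_obj, y_pred_obj)   # only p_c is off, by 0.5

y_true_bg = [0.0] * 8
y_pred_bg = [0.5, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]
loss_bg = localization_loss(y_true_bg, y_pred_bg)       # box and class errors are ignored
```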

1.2 Convolutional Sliding window with bounding box prediction

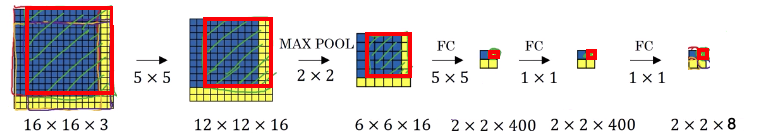

How, then, can we find these bounding boxes? The simple way is to choose a window, slide it over the entire image, and run the classifier at every position, but this is computationally very expensive. It turns out that convolution does something similar if we treat the filter as the window, with the filter size equal to the window size. Instead of running the network on each subset of the input image independently, it combines all subsets into a single computation and shares much of the work between them. The figure above illustrates this.

The red square represents one subset of the image and is also the size of the filter. After passing through several convolution and pooling layers, each of these subsets (here there are 4) produces one 1x1x8 vector, which has the same output format as discussed before.
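
To make the correspondence concrete, here is a toy check in plain Python. It assumes a single 3x3 linear filter stands in for the whole network and uses a 4x4 image, so there are 4 window positions. Cropping and scoring each window separately gives the same numbers as one convolution pass over the full image. All names are invented for this sketch, and a one-layer toy cannot show the real saving, which comes from sharing intermediate feature maps between overlapping windows in a deeper network.

```python
def sliding_window_scores(image, kernel, stride=1):
    """Naive approach: crop every window, then score each crop independently."""
    k = len(kernel)
    n_rows = (len(image) - k) // stride + 1
    n_cols = (len(image[0]) - k) // stride + 1
    scores = []
    for i in range(n_rows):
        row = []
        for j in range(n_cols):
            top, left = i * stride, j * stride
            crop = [r[left:left + k] for r in image[top:top + k]]
            # "Classifier" stand-in: dot product between the crop and the kernel
            row.append(sum(crop[a][b] * kernel[a][b]
                           for a in range(k) for b in range(k)))
        scores.append(row)
    return scores


def conv2d_valid(image, kernel, stride=1):
    """One pass over the whole image (valid cross-correlation, as in a CNN layer)."""
    k = len(kernel)
    return [[sum(image[top + a][left + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for left in range(0, len(image[0]) - k + 1, stride)]
            for top in range(0, len(image) - k + 1, stride)]


image = [[r * 4 + c for c in range(4)] for r in range(4)]   # 4x4 toy image
kernel = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]                   # 3x3 toy filter

per_window = sliding_window_scores(image, kernel)
one_pass = conv2d_valid(image, kernel)
```

Both results are 2x2 grids with one entry per window position, which mirrors how the convolutional network outputs one vector per subset of the image.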

1.3 Non-Max Suppression

One disadvantage of the above method is that the bounding boxes may not be accurate, because they depend heavily on the window (filter) size. Also, if the window is small, it is easy to end up with more than one bounding box for the same object. In that case we need non-max suppression.

- IoU (intersection over union) quantifies the degree of overlap between two boxes and is used in non-max suppression. Mathematically: \[IoU = \frac{\text{Size of intersection}}{\text{Size of union}}\]

- Steps of non-max suppression:

- Discard all boxes with \(p_c\le 0.6\).

- Pick the box with the largest \(p_c\) and keep it as the prediction for one object.

- Discard any remaining box whose \(IoU\) with the picked box is \(\ge 0.5\) (these are other bounding boxes for the same object).

- Among the boxes still left, pick the one with the largest \(p_c\) and repeat until no boxes remain.
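
The IoU formula and the suppression steps above can be sketched as follows. This is a minimal illustration rather than a reference implementation: boxes are assumed to be given as \((b_x, b_y, b_h, b_w)\) in one shared coordinate system, detections are `(p_c, box)` pairs, and the function names are made up for this example. The thresholds 0.6 and 0.5 are the ones used in the steps.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (b_x, b_y, b_h, b_w), with (b_x, b_y) the center."""
    ax, ay, ah, aw = box_a
    bx, by, bh, bw = box_b
    # Overlap along each axis, clamped to zero when the boxes do not intersect
    inter_w = max(0.0, min(ax + aw / 2, bx + bw / 2) - max(ax - aw / 2, bx - bw / 2))
    inter_h = max(0.0, min(ay + ah / 2, by + bh / 2) - max(ay - ah / 2, by - bh / 2))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, score_threshold=0.6, iou_threshold=0.5):
    """detections: list of (p_c, box). Returns the detections that survive."""
    # Step 1: discard low-confidence boxes, then sort by p_c, highest first
    candidates = sorted((d for d in detections if d[0] > score_threshold),
                        key=lambda d: d[0], reverse=True)
    kept = []
    while candidates:
        # Step 2: keep the most confident remaining box
        best = candidates.pop(0)
        kept.append(best)
        # Step 3: drop boxes that overlap it too much; step 4 is the loop itself
        candidates = [d for d in candidates if iou(best[1], d[1]) < iou_threshold]
    return kept


detections = [
    (0.9, (5.0, 5.0, 4.0, 4.0)),
    (0.8, (5.5, 5.0, 4.0, 4.0)),    # overlaps the first box heavily
    (0.7, (15.0, 15.0, 4.0, 4.0)),  # a separate object
    (0.3, (5.0, 5.0, 4.0, 4.0)),    # below the confidence threshold
]
kept = non_max_suppression(detections)
```

In this example the 0.8 box is suppressed because its IoU with the 0.9 box is above 0.5, while the 0.7 box survives because it does not overlap the others.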

2. Multi-Object Localization

Previously, we assumed that each subset of the image contains only one object, but in reality this is not always the case. Now let's look at how to handle a grid cell that contains two objects.

2.1 Anchor Box

The idea is fairly intuitive: we append a second object's information to the output, with one block of entries per anchor box: \[y^{(i)} = [\underbrace{p_{c1},b_x,b_y,b_h,b_w,c_1,c_2,c_3}_{\text{Anchor box 1}}, \underbrace{p_{c2},b_x,b_y,\cdots}_{\text{Anchor box 2}}]\]

2.2 The full YOLO flow

Putting everything together gives the algorithm named YOLO, which stands for "you only look once". Let's summarize the steps of this algorithm:

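- Train a CNN whose output has shape \(n\times n \times 2 \times 8\). Here \(n \times n\) is the number of grid cells, 2 is the number of anchor boxes, and 8 is the length of each anchor box's vector when there are 3 classes (\(p_c\), four box values, and three class probabilities).

- Then make a prediction with this CNN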

- Finally do non-max suppression:

- For each grid cell, get 2 predicted bounding boxes

- Get rid of all the low probability predictions

- For each class, use non-max suppression so that each detected object keeps only one box
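
The post-processing steps above can be sketched as follows, under several assumptions that go beyond the text: the network output is a nested list indexed as `output[row][col][anchor]`, box values are already expressed in image coordinates rather than relative to the grid cell, low-probability predictions are filtered on \(p_c\) alone, and each box is assigned to its highest-scoring class. The function names and the thresholds 0.6 and 0.5 (reused from section 1.3) are illustrative.

```python
def iou(a, b):
    """IoU of two boxes given as (b_x, b_y, b_h, b_w), with (b_x, b_y) the center."""
    inter_w = max(0.0, min(a[0] + a[3] / 2, b[0] + b[3] / 2) - max(a[0] - a[3] / 2, b[0] - b[3] / 2))
    inter_h = max(0.0, min(a[1] + a[2] / 2, b[1] + b[2] / 2) - max(a[1] - a[2] / 2, b[1] - b[2] / 2))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nms(dets, iou_threshold):
    """Greedy non-max suppression over a list of (p_c, box) pairs."""
    dets = sorted(dets, key=lambda d: d[0], reverse=True)
    kept = []
    while dets:
        best = dets.pop(0)
        kept.append(best)
        dets = [d for d in dets if iou(best[1], d[1]) < iou_threshold]
    return kept


def postprocess(output, score_threshold=0.6, iou_threshold=0.5):
    """output[row][col][anchor] = [p_c, b_x, b_y, b_h, b_w, c_1, c_2, c_3]."""
    by_class = {}
    for row in output:
        for cell in row:
            for pred in cell:                      # the 2 anchor-box predictions of this cell
                p_c, box, class_scores = pred[0], tuple(pred[1:5]), pred[5:]
                if p_c <= score_threshold:         # drop low-probability predictions
                    continue
                cls = max(range(len(class_scores)), key=class_scores.__getitem__)
                by_class.setdefault(cls, []).append((p_c, box))
    # Run non-max suppression separately for each class
    return {cls: nms(dets, iou_threshold) for cls, dets in by_class.items()}


empty = [0.0] * 8
output = [[[empty, empty] for _ in range(2)] for _ in range(2)]      # n = 2, 2 anchors
output[0][0][0] = [0.9, 5.0, 5.0, 4.0, 4.0, 0.1, 0.8, 0.1]           # class index 1
output[0][1][0] = [0.8, 5.5, 5.0, 4.0, 4.0, 0.2, 0.7, 0.1]           # same object, class index 1
output[1][1][1] = [0.7, 15.0, 15.0, 4.0, 4.0, 0.9, 0.05, 0.05]       # class index 0
result = postprocess(output)
```

Here `result` maps each class index to its surviving `(p_c, box)` pairs. The two overlapping class-1 boxes collapse into the more confident one, and the class-0 box is kept on its own.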

2.3 Other techniques

There are some other techniques that work well for object detection. Here is a brief introduction:

- R-CNN: uses a technique called region proposal. The idea is that since large areas of an image contain no objects, we can first run a segmentation algorithm (resulting in about 2000 blobs) and then run the classifier only on those regions. Rather than evaluating every sliding-window position, we evaluate only a few selected windows.

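- Fast R-CNN and Faster R-CNN: Fast R-CNN speeds up R-CNN by using a convolutional implementation of sliding windows to classify all the proposed regions. Faster R-CNN goes one step further and uses a convolutional network, instead of a segmentation algorithm, to propose the regions.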

Reference: