Another important application of CNNs is face verification and recognition.

Face verification is a binary classification problem: given a face, decide whether it belongs to the targeted person or not. Face recognition is a multi-class classification problem: decide whether the face belongs to any of the K known persons, and if so, which one. Let's look at both in detail.

1. Siamese Network

The difference between face verification and ordinary image classification is that we do not have many training images for each class (each person, here). This is called the one-shot learning problem: we must make correct predictions given only a single example of each new class. An ordinary classification network may therefore not give good predictions.

The Siamese network was created to fill this gap. The idea behind it is quite intuitive: instead of having the network extract the features of each image and then classify it, we train a neural network to output an encoding of the image and let it learn how similar two images are. In other words, we only want to compare the similarities and differences among the people in our database; the network does not need to learn anything more general.

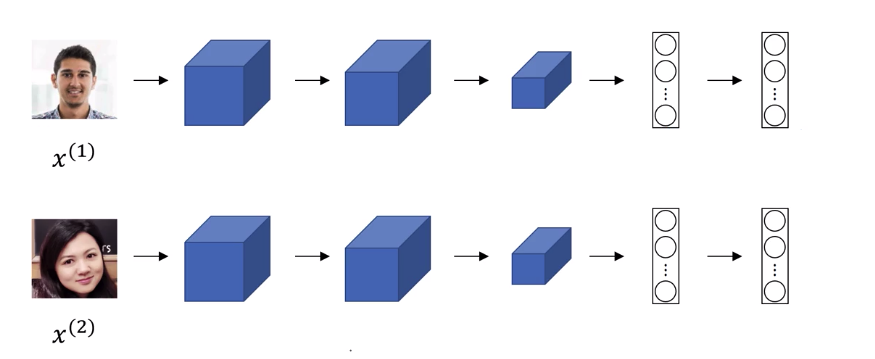

For example (picture above), we compute an encoding for image \(x^{(1)}\), then use the same network with the same parameters to compute the encoding of \(x^{(2)}\). If the two images show the same person, the difference between the two encodings should be small; otherwise it should be large. The network follows this logic when it minimizes the loss and learns its parameters.
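As a minimal sketch of this idea, the snippet below pushes three inputs through one shared encoder and compares the squared distances between the encodings. The `encode` function, the `weights` matrix and the four-value "images" are hypothetical stand-ins for a real CNN and real face images; they are chosen only to make the shared-parameter logic visible.

```python
def encode(image, weights):
    """Toy stand-in for the shared network f: a single linear layer."""
    return [sum(w * x for w, x in zip(row, image)) for row in weights]


def squared_distance(u, v):
    """Squared L2 distance between two encodings of equal length."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


# Hypothetical parameters. The key point of a Siamese setup is that
# every image is encoded with the SAME weights.
weights = [[0.5, -0.5, 0.0, 0.0],
           [0.0, 0.0, 0.5, -0.5]]

x1 = [1.0, 0.0, 1.0, 0.0]   # image of person 1
x2 = [0.9, 0.1, 1.0, 0.0]   # slightly different image of person 1
x3 = [0.0, 1.0, 0.0, 1.0]   # image of another person

d_same = squared_distance(encode(x1, weights), encode(x2, weights))
d_diff = squared_distance(encode(x1, weights), encode(x3, weights))

print(d_same < d_diff)  # the same-person pair is closer in encoding space
```

In a trained network the weights would be learned so that this ordering holds for real faces; here it holds only because the toy values were picked that way.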

How, then, do we define this loss?

2. Triplet Loss

Triplet loss was introduced to fit the Siamese network. The logic is the same as before: if \(x^{(1)}\) and \(x^{(2)}\) show the same person, we want the distance between their encodings to be small (we use distance to quantify similarity); otherwise we want it to be large. To achieve this, each training example consists of three images \((A^{(i)},P^{(i)},N^{(i)})\):

- A is an "Anchor" image--a picture of a person.

- P is a "Positive" image--a picture of the same person as the Anchor image.

- N is a "Negative" image--a picture of a different person than the Anchor image.

We'd like to make sure that the encoding of an image \(A^{(i)}\) of an individual is closer to the encoding of the Positive \(P^{(i)}\) than to the encoding of the Negative \(N^{(i)}\) by at least a margin \(\alpha\):

\[\lVert f(A^{(i)}) - f(P^{(i)}) \rVert_2^2 + \alpha < \lVert f(A^{(i)}) - f(N^{(i)}) \rVert_2^2\]
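To see how this constraint turns into a cost, one can move every term to the left-hand side. This is a small intermediate step added for illustration:

\[\lVert f(A^{(i)}) - f(P^{(i)}) \rVert_2^2 - \lVert f(A^{(i)}) - f(N^{(i)}) \rVert_2^2 + \alpha < 0\]

A triplet that already satisfies the constraint needs no correction, so only a positive left-hand side should be penalized. Taking the maximum of this expression and 0 does exactly that.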

We would thus like to minimize the following "triplet cost" over the \(m\) training triplets:

\[\mathcal{J} = \sum^{m}_{i=1} \max\left[ \lVert f(A^{(i)}) - f(P^{(i)}) \rVert_2^2 - \lVert f(A^{(i)}) - f(N^{(i)}) \rVert_2^2 + \alpha,\ 0 \right]\]
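The cost can be sketched directly in plain Python. This is an illustration, not a training-ready implementation: the encodings are assumed to be already computed and are given as lists of floats, and the default margin `alpha=0.2` is an assumed value, not one taken from the text.

```python
def squared_distance(u, v):
    """Squared L2 distance between two encodings of equal length."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchors, positives, negatives, alpha=0.2):
    """Sum over all triplets of max(d(A, P) - d(A, N) + alpha, 0)."""
    total = 0.0
    for a, p, n in zip(anchors, positives, negatives):
        pos_dist = squared_distance(a, p)   # anchor vs. same person
        neg_dist = squared_distance(a, n)   # anchor vs. different person
        total += max(pos_dist - neg_dist + alpha, 0.0)
    return total


# Two hypothetical triplets of 2-D encodings f(A), f(P), f(N).
anchors   = [[0.0, 0.0], [0.0, 0.0]]
positives = [[0.1, 0.0], [0.5, 0.0]]
negatives = [[1.0, 0.0], [0.6, 0.0]]

loss = triplet_loss(anchors, positives, negatives)
print(loss)
```

The first triplet satisfies the margin (0.01 + 0.2 < 1.0) and contributes nothing. The second violates it (0.25 + 0.2 > 0.36), so it contributes a positive term to the cost.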

3. Summary

Face verification and recognition rest on two key techniques: encoding and triplet loss. Recall from the last article that Neural Style Transfer also uses encodings to capture both the content and the style information of an image. So first I want to briefly summarize why encoding is so important for CNN applications.

3.1 Encoding

Encoding is the key strategy for dealing with image-related problems. The reason: we cannot compare pixel values directly, because they change dramatically with variations in lighting, orientation and other conditions.

Before neural networks, people used various techniques for encoding or feature extraction, followed by a classification model such as an SVM. Now, thanks to the powerful feature extraction ability of CNNs, we can use the output of a good pre-trained CNN (usually trained on a large number of images) as the encoding, and compare encodings instead of raw pixel values.

3.2 Application Pipeline

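- Create training data \((A^{(i)},P^{(i)},N^{(i)})\)

- Train the CNN model to minimize the triplet loss. Notice that at prediction time the network takes a single image as input, but to minimize the loss during training we also need the encodings of \(P^{(i)}\) and \(N^{(i)}\), computed with the same network as the anchor.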

- After training the model:

  - Face verification: calculate the distance between the encoding of the new image and the stored encoding of the claimed person. Then compare it with the threshold to make the decision.

  - Face recognition: calculate the distance between the encoding of the new image and every other stored encoding (all the people we have in the database). Then find the one with the smallest distance. Finally, compare this distance with the threshold to decide whether it is a match at all.
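The two steps after training can be sketched as follows. The `database` of stored encodings, the names in it, the 2-D vectors and the `threshold` value are all hypothetical; in practice the encodings would come from the trained CNN. Using the squared L2 distance here is an assumption made to match the loss above.

```python
import math


def squared_distance(u, v):
    """Squared L2 distance between two encodings of equal length."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def verify(new_encoding, claimed_encoding, threshold):
    """Face verification: is the new face close enough to the claimed person?"""
    return squared_distance(new_encoding, claimed_encoding) < threshold


def recognize(new_encoding, database, threshold):
    """Face recognition: return the closest known person, or None if too far."""
    best_name, best_dist = None, math.inf
    for name, encoding in database.items():
        dist = squared_distance(new_encoding, encoding)
        if dist < best_dist:
            best_name, best_dist = name, dist
    # Even the nearest person must pass the threshold to count as a match.
    return best_name if best_dist < threshold else None


# Hypothetical stored encodings, one per known person.
database = {"alice": [0.0, 1.0], "bob": [1.0, 0.0]}
threshold = 0.5

new_face = [0.1, 0.9]   # encoding of a new image, close to "alice"
stranger = [3.0, 3.0]   # encoding far from everyone in the database

is_alice = verify(new_face, database["alice"], threshold)
is_bob = verify(new_face, database["bob"], threshold)
who = recognize(new_face, database, threshold)
unknown = recognize(stranger, database, threshold)
```

Verification is a single comparison against one stored encoding. Recognition is a nearest-neighbour search over the whole database, followed by the same threshold test.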

Reference:

https://www.cs.cmu.edu/~rsalakhu/papers/oneshot1.pdf

DeepLearning.ai